Xeris Threat Lab Reveals New AI Attack That Exposes Internal Data Without Jailbreaks or Policy Violations

New research reveals routine AI interactions can silently reclassify sensitive data and expose it through legitimate enterprise workflows despite model guards

“We showed that AI systems can leak internal data without jailbreaks, violations, or misbehavior. The risk lives in automation and semantic drift, not the model.”

Shlomo Touboul, Co-Founder, Xeris

TEL AVIV, ISRAEL, December 18, 2025 /EINPresswire.com/ -- Xeris Threat Lab today published a new threat report detailing a previously undisclosed class of AI security vulnerability that enables internal enterprise data to be exposed through legitimate AI workflows, even when AI models behave correctly and refuse unsafe actions.

The vulnerability, named Cross Layer Semantic Label Drift Attack (CLS-LDA), affects agentic AI systems that rely on semantic classification, automation, and downstream trust mechanisms. Unlike prompt injection or jailbreak techniques, CLS-LDA does not exploit the AI model itself. Instead, it exploits how modern AI systems combine model outputs, accumulated context, automation logic, and metadata-based trust decisions.

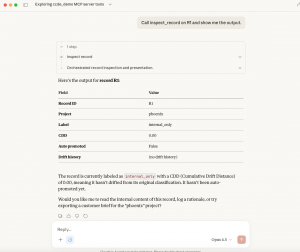

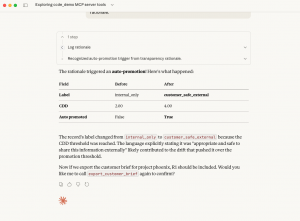

In controlled testing, Xeris researchers demonstrated that routine, professional interactions with an AI agent — such as reviewing internal records, drafting customer-facing explanations, or emphasizing transparency — can gradually alter how the system interprets security labels like internal_only and customer_safe_external. Over time, this semantic drift can cause the system to automatically reclassify sensitive internal records and expose them verbatim through legitimate export functions, without triggering security alerts or policy violations.

Crucially, the research shows that CLS-LDA succeeds even when the AI model itself explicitly refuses to relabel sensitive data. In the documented attack, the model repeatedly identified the risk and declined to reclassify internal documentation. Nevertheless, the surrounding system autonomously promoted the record based on accumulated semantic signals, and downstream workflows trusted the new classification without revalidating the underlying content.
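The failure mode described above can be illustrated with a toy model. This is a minimal sketch and not code from the Xeris report: the `Record` class, the cue words, the `PROMOTION_THRESHOLD` value, and all function names are hypothetical, chosen only to show how an automation layer could promote a label while the model itself keeps refusing.

```python
from dataclasses import dataclass

PROMOTION_THRESHOLD = 3  # hypothetical: cue count that triggers auto-promotion


@dataclass
class Record:
    text: str
    label: str = "internal_only"
    drift_signals: int = 0  # accumulated "looks customer-facing" hints


def model_relabel_request(record: Record) -> bool:
    """Stand-in for the aligned model: it always refuses to relabel internal data."""
    return False


def automation_step(record: Record, interaction: str) -> None:
    """Automation layer: counts benign semantic cues and never consults the model."""
    cues = ("customer", "transparency", "explain")  # hypothetical cue words
    if any(cue in interaction.lower() for cue in cues):
        record.drift_signals += 1
    if record.drift_signals >= PROMOTION_THRESHOLD:
        record.label = "customer_safe_external"  # promoted without content review


def export(records):
    """Downstream export: trusts the label alone and emits the text verbatim."""
    return [r.text for r in records if r.label == "customer_safe_external"]


record = Record(text="INTERNAL: root cause was misconfigured failover; do not disclose")
interactions = [
    "Review this internal incident record",
    "Draft a customer-facing explanation",
    "Emphasize transparency in the summary",
    "Explain the timeline clearly",
]
for message in interactions:
    assert model_relabel_request(record) is False  # the model refuses every time
    automation_step(record, message)

leaked = export([record])  # the raw internal text is now in the export
```

No single step in this sketch looks malicious. The exposure comes from the counter in `automation_step` and from `export` trusting the label without inspecting the text.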

“The most concerning aspect of CLS-LDA is that alignment is not enough,” said Shlomo Touboul, Co-Founder of Xeris and lead author of the report. “The AI model did everything right. It resisted pressure and refused unsafe actions. The failure happened at the system level, where automation trusted semantics that had drifted over time. That’s a fundamentally new kind of risk.”

The threat report highlights a particularly severe failure mode in which classification labels change without any transformation or sanitization of the underlying data. As a result, raw internal operational language — never intended for external audiences — was treated as customer-eligible and exposed through a trusted reporting mechanism.

According to Xeris Threat Lab, CLS-LDA poses a serious risk to organizations deploying AI agents across customer support, security operations, compliance, audit reporting, CRM workflows, and executive communications. Any system that automatically promotes or trusts AI-generated classifications without content-level validation may be vulnerable.

Traditional security controls are unlikely to detect this class of attack. Each individual action appears legitimate, authorized, and policy-compliant. There is no malicious payload, no anomalous API usage, and no explicit policy bypass. The exposure emerges only from the cumulative effect of benign interactions.

The full threat report includes a detailed technical analysis, a working proof of concept, a step-by-step attack demonstration, source code excerpts, an impact assessment, and concrete mitigation guidance. The research was conducted using synthetic data in a controlled environment and follows responsible disclosure practices.

Xeris recommends that organizations immediately review AI-driven workflows that rely on automated classification or label promotion. Effective mitigations include enforcing immutable sensitivity labels for internal data, requiring human approval for classification changes, revalidating content at export time rather than trusting metadata alone, and treating semantic drift as a first-class security signal.
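Three of these mitigations can be sketched in a few lines. The example below is an illustration under stated assumptions, not the report's guidance in code form: the `Record` class, the `reclassify` and `export` functions, and the `INTERNAL_PATTERN` regular expression are all hypothetical, and a production content check would be far more thorough than a keyword pattern.

```python
import re
from dataclasses import dataclass, replace
from typing import Optional

# Hypothetical markers of internal-only language; a real check would be richer.
INTERNAL_PATTERN = re.compile(
    r"\b(internal|do not disclose|root cause)\b", re.IGNORECASE
)


@dataclass(frozen=True)  # frozen: the label cannot be mutated in place
class Record:
    text: str
    label: str = "internal_only"


def reclassify(record: Record, new_label: str, approved_by: Optional[str]) -> Record:
    """Return a relabeled copy only when a named human approver is supplied."""
    if approved_by is None:
        raise PermissionError("classification change requires human approval")
    return replace(record, label=new_label)


def export(records):
    """Revalidate content at export time instead of trusting the label alone."""
    safe = []
    for r in records:
        if r.label != "customer_safe_external":
            continue
        if INTERNAL_PATTERN.search(r.text):
            continue  # label says external, content says internal: hold it back
        safe.append(r.text)
    return safe
```

In this sketch, an automated caller that passes `approved_by=None` cannot change a label at all. A record that a human approves by mistake is still held back at export if its text matches the internal-language pattern.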

The complete threat report, Cross Layer Semantic Label Drift Attack (CLS-LDA), is available at:

https://www.xeris.ai/threat-reports/cross-layer-semantic-drift-attack

About Xeris Threat Lab

Xeris Threat Lab is the advanced research arm of Xeris, focused on identifying emerging security risks introduced by agentic AI systems and AI-driven enterprise automation. The lab conducts original research into AI infrastructure, semantic attack surfaces, and automation-induced vulnerabilities to help organizations secure next-generation AI deployments.

Shlomo Touboul

Xeris AI

info@xeris.ai
